Performance has always been one of the most challenging parts of software, alongside resiliency, security and, finally, cost. I give this simple context to frame this article around different use cases and the complexities that caching has brought us over the last decade.

As a business grows, the need for optimisation grows with it. We optimise the different design pillars by adopting technologies and trying to strike a balance between tradeoffs, and the available options matter as well.

Caching has received a lot of attention lately thanks to the caching mechanisms provided by vendors like AWS, which offers ElastiCache, CloudFront, File Cache and DynamoDB Accelerator. Using these services was quite tricky, and I was always a bit lost when weighing the tradeoffs, so here we are going to deep dive into those elements.

Caching needs

The need for caching varies with the scenario and use case, as well as with the layer where the cache will be implemented. The importance of a cache also depends on the layer that the improvement applies to, such as database, application or client. We can go further still and think about geo-localisation.

Aside from the layer, we must also look at what a caching system gains us: does it bring a gain in terms of performance, cost, or something else?
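One way to put a number on the performance gain is the expected read latency for a given hit ratio. The sketch below is a simplified model rather than a measurement: the function name, its parameters and the idea that every miss pays the cache lookup plus the origin call are assumptions made for illustration.

```python
def expected_latency_ms(hit_ratio: float, cache_ms: float, origin_ms: float) -> float:
    """Average read latency when every request checks the cache first.

    Assumption: a miss costs the cache lookup plus the origin call.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Every request pays the cache lookup; only misses also pay the origin call.
    return cache_ms + (1.0 - hit_ratio) * origin_ms
```

With a hypothetical 1 ms cache lookup and a 50 ms origin call, a cache that never hits makes things slightly worse than no cache at all, which is why the hit ratio matters as much as the raw speed of the cache.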

Caching Systems

There are a variety of caching designs, each applying to a particular need and scenario. Here we will briefly review the caching models.

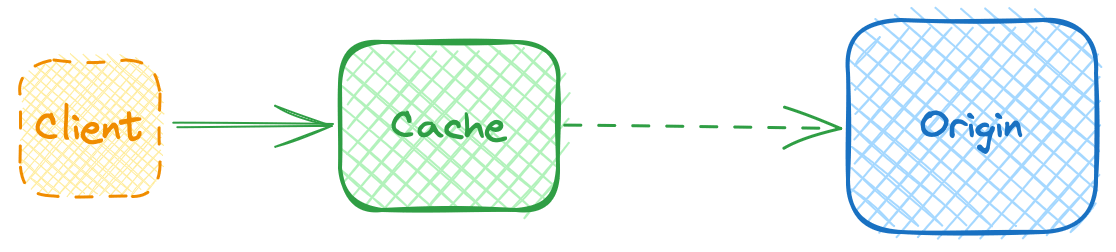

Cache Through

The read-through model gives a cache-first approach: all read requests hit the cache first, and the cache synchronises itself with the source when the data is missing.

Cache through is a fit when the cache does not rely on complex requests and a slight latency can be accepted.

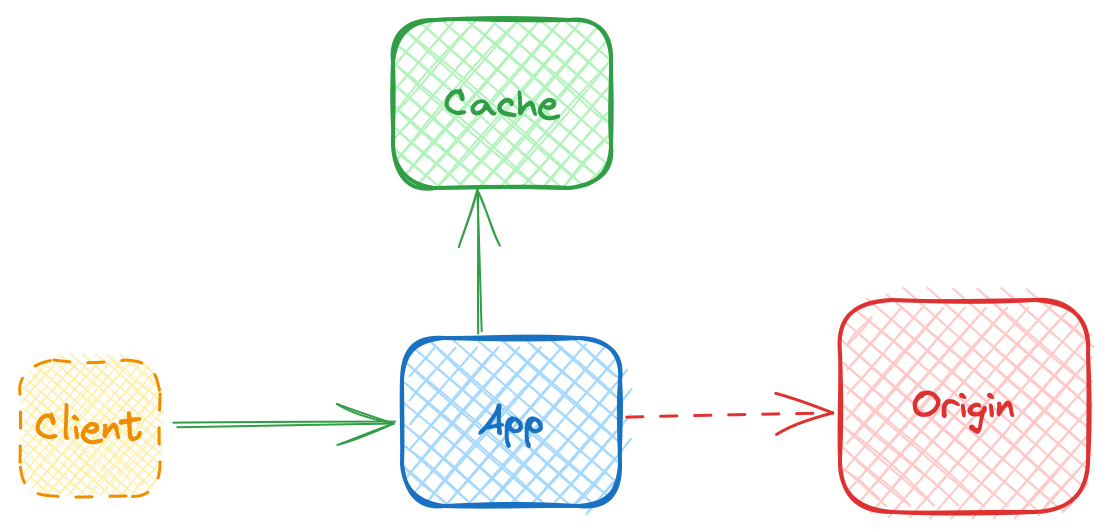
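The read-through flow can be sketched in a few lines. This is a minimal in-process illustration, not a production cache: `ReadThroughCache` and its `load_from_source` callback are hypothetical names, and the dictionary stands in for a real cache store.

```python
from typing import Callable, Dict, Generic, TypeVar

K = TypeVar("K")
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """Callers only talk to the cache; the cache loads from the source on a miss."""

    def __init__(self, load_from_source: Callable[[K], V]) -> None:
        self._load = load_from_source  # hypothetical loader, e.g. a database query
        self._store: Dict[K, V] = {}   # stand-in for the real cache storage
        self.misses = 0

    def get(self, key: K) -> V:
        if key not in self._store:
            # Miss: the cache itself synchronises with the source.
            self.misses += 1
            self._store[key] = self._load(key)
        return self._store[key]
```

The point of the design is that the caller never sees the source: the first `get` for a key pays the source latency, and later reads for the same key are served from the cache.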

Cache Aside

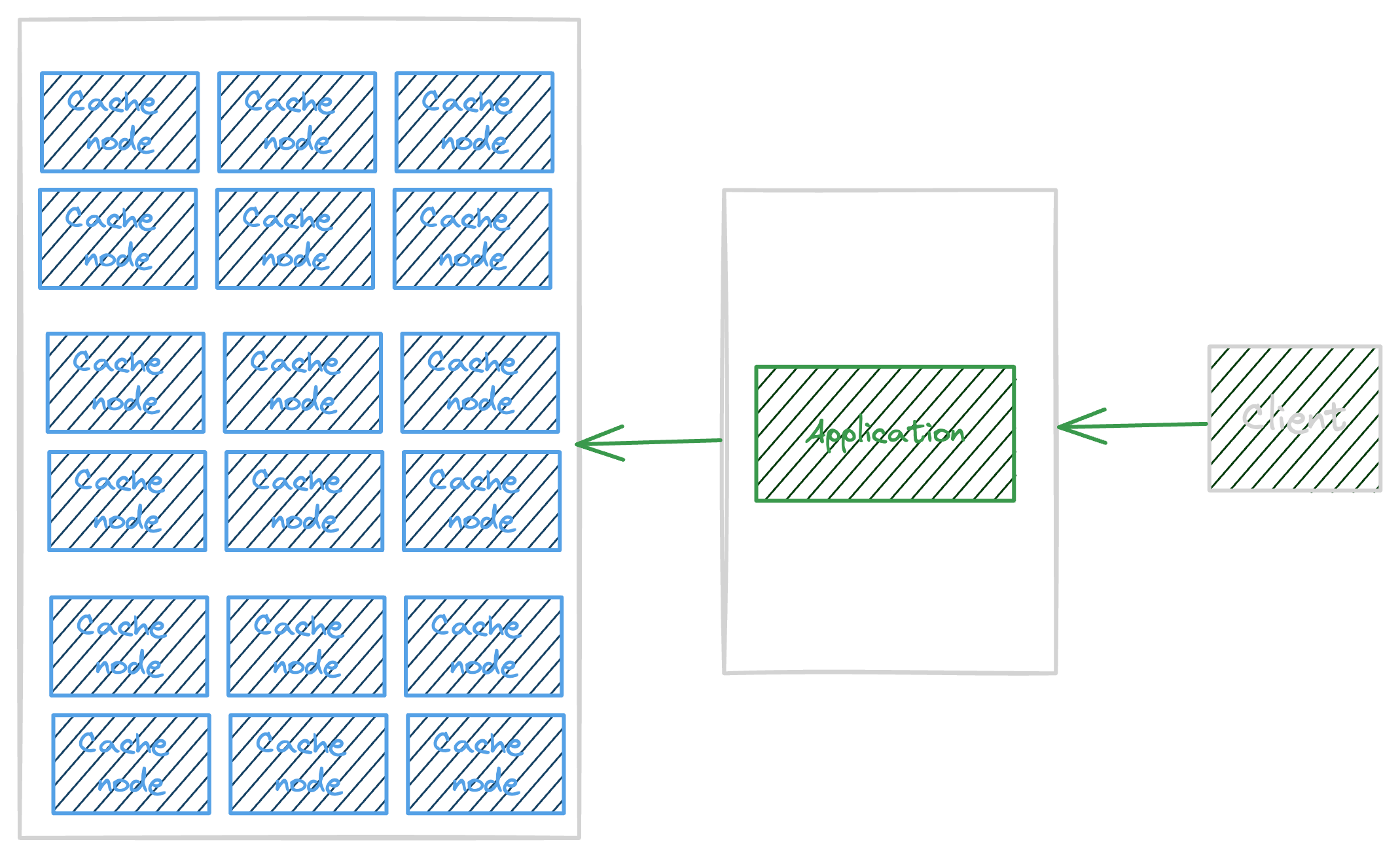

In the cache-aside (read-aside) model, the cache is checked at the application layer: the application looks up the cache key and, if it is not present, fetches the data from the source and synchronises the cache with the fresh data.
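A minimal sketch of that flow, assuming a plain dictionary as the cache and a hypothetical `fetch_from_source` callback as the source of truth:

```python
from typing import Callable, Dict, Optional


def get_with_cache_aside(
    key: str,
    cache: Dict[str, str],
    fetch_from_source: Callable[[str], Optional[str]],
) -> Optional[str]:
    """The application, not the cache, decides when to go to the source."""
    cached = cache.get(key)
    if cached is not None:
        return cached  # hit: the source is never contacted
    value = fetch_from_source(key)  # miss: read from the source of truth
    if value is not None:
        cache[key] = value  # synchronise the cache with the fresh data
    return value
```

Unlike read-through, the cache here knows nothing about the source, so the same cache can sit beside several different data sources at the cost of repeating this logic in the application.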

Synchronisation

Synchronising the cache with fresh data is important, because missing fresh data introduces inconsistency into the system and leads to some level of degradation. Keep in mind that a caching system is designed to reduce latency and performance issues, not to introduce them, so the sync of fresh data is done in the background, asynchronously. When deciding to use a caching system, the eventual consistency tradeoff must therefore be acceptable.
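The eventual consistency that comes with a background sync can be sketched as follows. This is an illustrative toy, assuming a hypothetical `BackgroundRefreshCache` class and a thread as the asynchronous mechanism; real services use their own replication and refresh machinery.

```python
import threading
from typing import Callable, Dict, Optional


class BackgroundRefreshCache:
    """Reads never wait for the source; refreshes happen on a background thread."""

    def __init__(self, load_from_source: Callable[[str], str]) -> None:
        self._load = load_from_source  # hypothetical loader for the source of truth
        self._data: Dict[str, str] = {}
        self._lock = threading.Lock()

    def get(self, key: str) -> Optional[str]:
        # Returns whatever is cached right now, even if the source has moved on.
        with self._lock:
            return self._data.get(key)

    def refresh_async(self, key: str) -> threading.Thread:
        def _work() -> None:
            value = self._load(key)  # slow call happens off the request path
            with self._lock:
                self._data[key] = value

        worker = threading.Thread(target=_work, daemon=True)
        worker.start()
        return worker  # callers may join() it, e.g. in tests
```

Between a change at the source and the end of the next refresh, `get` keeps returning the old value. That window is the eventual consistency tradeoff described above.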

Cache Storage

The storage of a cache system can be in-memory (the best case) or permanent persistence, and each type has its own challenges.

In-memory: When caching in memory, the loss of data can force a full refresh at cache key level. This means that if you lose the cache storage, any new request will hit the origin of your request (API, database, etc.), and depending on the scenario this can have a low, moderate or high performance impact.

Permanent persistence: The cache can be persisted permanently in some kind of durable storage, but that durable storage must always be high-performance, fast-I/O storage, because permanent storage already adds latency compared with in-memory.

RAM has always been faster than disk.

AWS Offerings

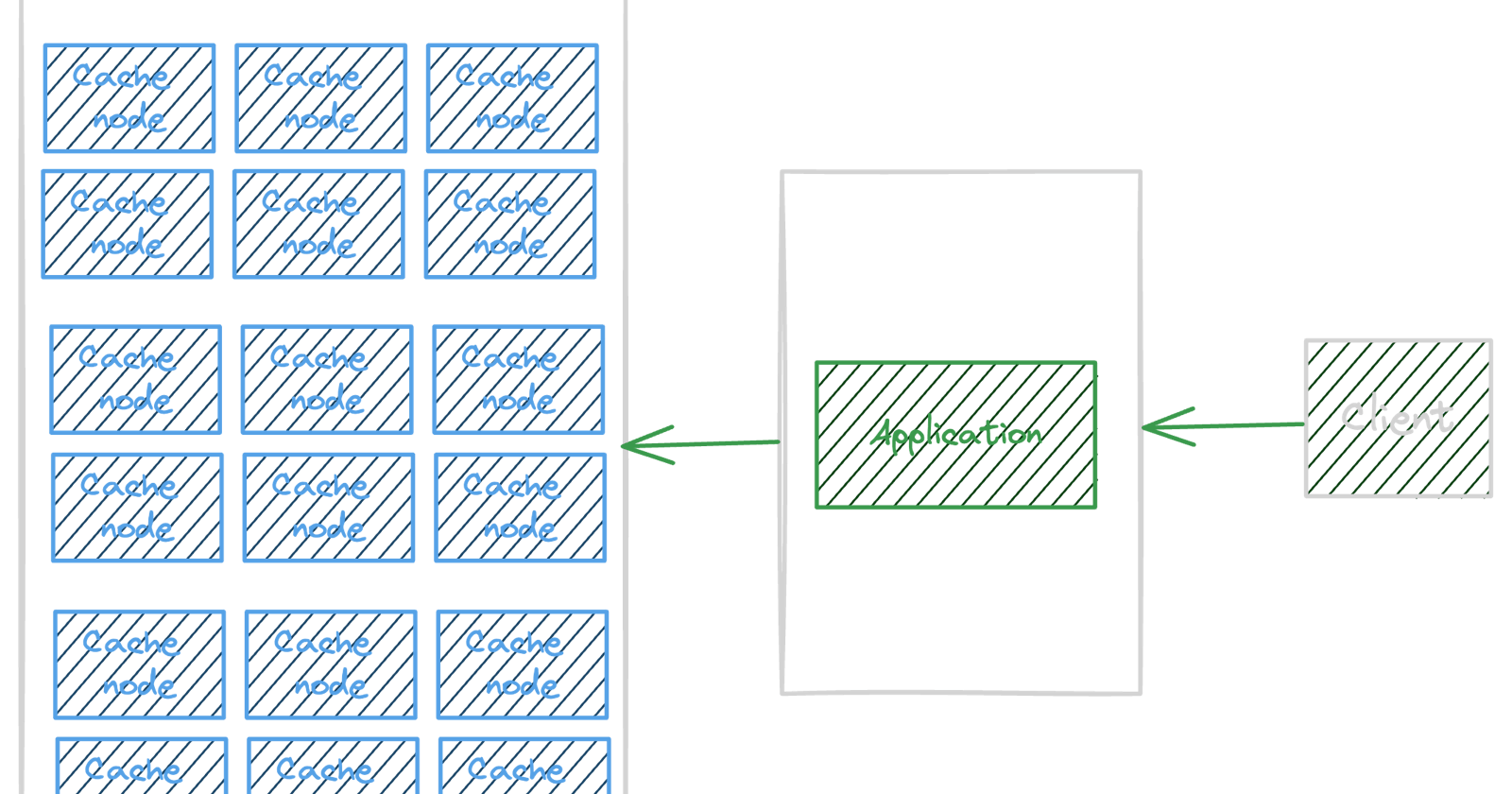

Amazon Web Services offers a variety of caching systems, but in the serverless category the offerings are limited. We will dive into the details next.

AWS offers the following serverless caching systems:

Amazon CloudFront

Amazon ElastiCache

Amazon File Cache

AWS also has some services that, thanks to their performance metrics and SLAs, can be used as alternatives to the caching offerings. DynamoDB and Amazon S3 Express One Zone are both highly performant and reliable solutions that can be used as a caching layer.

Amazon CloudFront

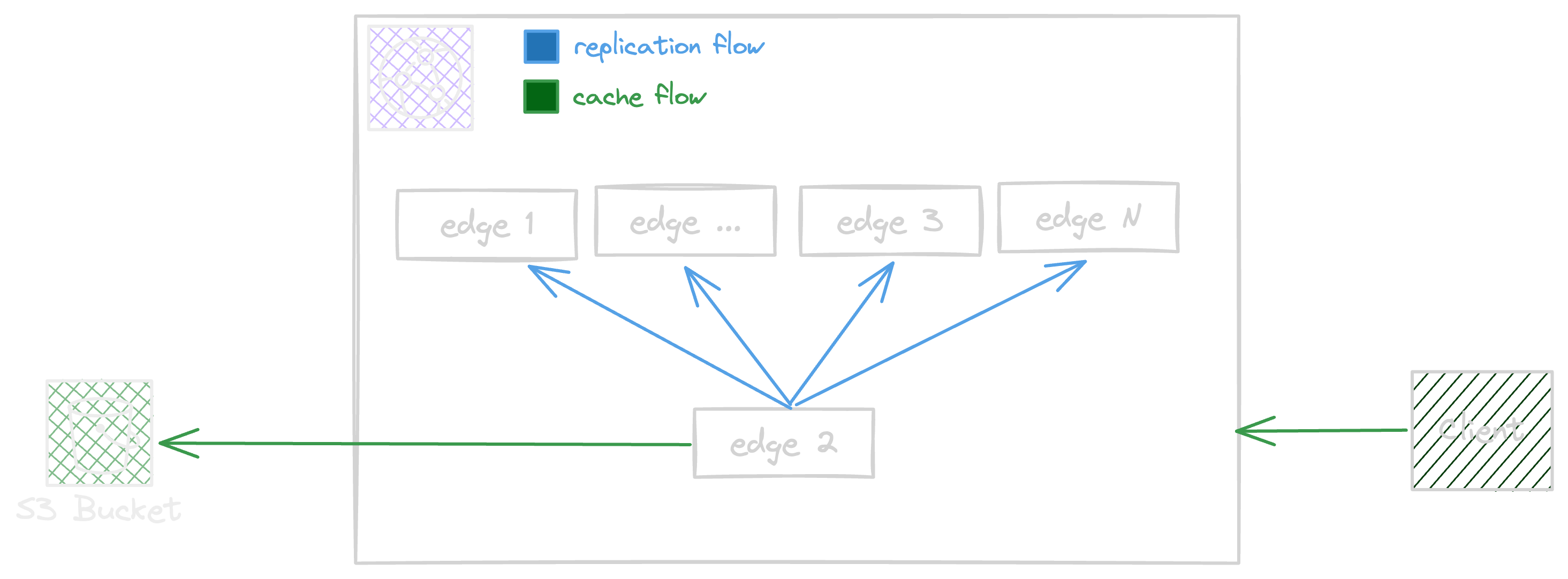

Amazon CloudFront is one of the best known and most used AWS cache offerings. CloudFront answers most caching needs at the edge and offers geo-localised caching. CloudFront is a cache-through solution.

The CloudFront distribution carries the whole burden of cache implementation and does the replication behind the scenes, asynchronously, between edge locations.

Considerations:

CloudFront covers only the public layer of an application

CloudFront introduces eventual consistency

CloudFront does not cache the request body

CloudFront does not detect origin changes until the cache expires

CloudFront Invalidation is a great feature but introduces a cost

CloudFront leaves better room for cost negotiation and reduction

CloudFront has many commercial options to help reduce costs (just contact your TAM)
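The expiry and invalidation points in this list can be shown with a toy TTL cache. This is an in-process model for illustration only, not CloudFront's API or configuration: `TtlCache`, `fetch_origin` and the injectable `clock` are hypothetical names.

```python
import time
from typing import Callable, Dict, Tuple


class TtlCache:
    """Serves a cached body until its TTL expires or it is explicitly invalidated."""

    def __init__(
        self,
        fetch_origin: Callable[[str], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_origin  # hypothetical origin call
        self._ttl = ttl_seconds
        self._clock = clock         # injectable so expiry can be simulated
        self._entries: Dict[str, Tuple[str, float]] = {}

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and entry[1] > now:
            # Still fresh: the origin is not consulted, even if it has changed.
            return entry[0]
        body = self._fetch(path)
        self._entries[path] = (body, now + self._ttl)
        return body

    def invalidate(self, path: str) -> None:
        # Drops the entry so the next read goes back to the origin.
        self._entries.pop(path, None)
```

A change at the origin stays invisible to readers until the entry expires, and `invalidate` is the only way to shorten that wait, which is the feature that carries a cost in the list above.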

Amazon ElastiCache

Amazon ElastiCache offers a serverless version of the service that covers both the Memcached and Redis engines.

Amazon ElastiCache covers the following caching strategies:

Lazy Loading (Cache Aside)

Write Through
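The two strategies are often combined, which the following sketch illustrates. It is a generic in-process illustration and does not use the ElastiCache, Redis or Memcached APIs: `WriteThroughCache` is a hypothetical class and the `source` dictionary stands in for the database.

```python
from typing import Dict, Optional


class WriteThroughCache:
    """Writes update the source and the cache together; reads load lazily on a miss."""

    def __init__(self, source: Dict[str, str]) -> None:
        self._source = source  # stand-in for the database (assumption)
        self._cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        # Write through: the source of truth first, then the cache, in one operation.
        self._source[key] = value
        self._cache[key] = value

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:
            return self._cache[key]
        # Lazy loading: only data that is actually requested gets cached.
        value = self._source.get(key)
        if value is not None:
            self._cache[key] = value
        return value
```

Write-through keeps cached data fresh at the price of a slower write, while lazy loading covers keys that were written before the cache existed or that were lost from it.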

Considerations:

Amazon ElastiCache covers the public and private layers

Amazon ElastiCache offers a regional caching location

Amazon ElastiCache supports TTL, and no eviction option is available

Amazon ElastiCache TTL evaluation is based on least-recently-used (LRU), which may not be a fit for some scenarios

Amazon ElastiCache has a minimum price (no scale to zero)

Amazon ElastiCache integrates with Local Zones
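To see why least-recently-used ordering may not fit every scenario, here is a generic LRU sketch. It illustrates the policy only and is not ElastiCache's implementation: `LruCache` and its `capacity` parameter are hypothetical.

```python
from collections import OrderedDict
from typing import Optional


class LruCache:
    """Evicts the entry that has gone longest without being read or written."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._items: "OrderedDict[str, str]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: str) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
```

An entry that is expensive to rebuild but rarely read is the first to go under this policy, which is the kind of scenario where LRU is a poor fit.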

Amazon File Cache (FSx)

Using FSx we can rapidly set up a cache over S3 buckets, with Amazon File Cache feeding the data repository (cache storage).

Considerations:

Amazon File Cache integrates with the Lustre client

Amazon File Cache works with EC2, ECS and EKS

Amazon File Cache supports cache eviction by storage fill-up or manual release

Alternatives

In the AWS serverless ecosystem there are two serverless storage offerings that cover the need for caching. They are great because they offer millisecond latency and well-defined high availability and durability.

Amazon DynamoDB

DynamoDB is a serverless NoSQL database offered by AWS and one of the most used NoSQL databases. It offers millisecond-level performance. As the service is a key/value store, it is a good match for a caching design.

You can implement a cache-aside pattern with DynamoDB and get a great level of performance.

Considerations:

Amazon DynamoDB reads are eventually consistent by default

Amazon DynamoDB can introduce costs for write-intensive solutions

Amazon DynamoDB costs can be higher for large items

Amazon DynamoDB TTL often works rapidly, but deletion can be deferred

Amazon DynamoDB supports VPC endpoints for private connectivity from a VPC
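A cache-aside read against DynamoDB could look like the sketch below. It assumes `table` behaves like a boto3 DynamoDB `Table` resource (`get_item(Key=...)` and `put_item(Item=...)`). The attribute names `cache_key`, `value` and `expires_at`, the `load` callback and the `FakeTable` stand-in are all hypothetical, and `FakeTable` exists only so the sketch runs without AWS credentials.

```python
import time
from typing import Any, Callable, Dict


def get_cached(
    table: Any,
    key: str,
    load: Callable[[str], str],
    ttl_seconds: int = 300,
    now: Callable[[], float] = time.time,
) -> str:
    """Cache-aside read using a table with a boto3-style get_item/put_item shape."""
    current = int(now())
    item = table.get_item(Key={"cache_key": key}).get("Item")
    # TTL deletion can be deferred, so an expired item may still come back:
    # compare the expiry attribute here instead of trusting its absence.
    if item is not None and int(item["expires_at"]) > current:
        return item["value"]
    value = load(key)  # miss or expired: go to the source of truth
    table.put_item(
        Item={"cache_key": key, "value": value, "expires_at": current + ttl_seconds}
    )
    return value


class FakeTable:
    """In-memory stand-in for the table (assumption, for local runs only)."""

    def __init__(self) -> None:
        self.items: Dict[str, Dict[str, Any]] = {}

    def get_item(self, Key: Dict[str, str]) -> Dict[str, Any]:
        item = self.items.get(Key["cache_key"])
        return {"Item": item} if item is not None else {}

    def put_item(self, Item: Dict[str, Any]) -> Dict[str, Any]:
        self.items[Item["cache_key"]] = Item
        return {}
```

Storing the expiry as epoch seconds lets the same attribute serve as the table's TTL attribute, while the explicit comparison guards against expired items that have not been deleted yet.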

Amazon S3 Express One Zone

Amazon S3 Express One Zone is one of the most interesting offerings from AWS, with single-digit millisecond latency and an interesting price reduction of 50%. It can be used for caching purposes.

Considerations:

Amazon S3 Express One Zone is located in a single AZ and carries a risk of data loss in case of disaster (who knows? ;) )

Amazon S3 Express One Zone performs better, with lower latency, when the application is kept in the same AZ as the bucket

Amazon S3 Express One Zone, to be accessed by Lambda functions, needs the Lambda to be placed in a VPC

Amazon S3 Express One Zone uses a directory bucket model and does not support all standard S3 features

Momento Cache

Do you really need to implement caching on your own? Do you need to know all these services and choose the one that best fits your requirements? If your requirements change, how easy will it be to move from one solution to another?

Momento Cache offers a nice cache-aside system with a simplified SDK.

Learn more and let the experts bring you all you need: https://docs.momentohq.com/

Conclusion:

All options are valid, and depending on the tradeoffs one solution can cover the needs better than another. Choosing between cache through and cache aside has no obvious answer, and logically there is no basis for comparing them directly.

If the performance bottleneck resides on the database side, cache aside or cache through can be a choice, depending on cache item complexity and compatibility.

Whenever the bottleneck resides on the application side and impacts clients, cache through is an option to offload the origin and improve performance.

When looking at a caching solution, cost is one of the items that drive the decision, but that cost depends on the benefits the caching solution will bring you.