I enjoy following the AWS Lambda runtime battle since I use AWS Lambda as part of my work. I have experimented with several runtimes including C#, Node.js, Python, and Java, and have learned a lot about their respective pros and cons. It has been an interesting journey with many movements between runtimes, but ultimately a great learning experience.

Lambda Runtime

Lambda functions run inside a container-like execution environment, for both managed and custom runtimes. In both cases, Lambda prepares the execution environment, and the runtime uses a runtime interface to receive events and return responses.

AWS Lambda offers the following managed runtimes:

Node.js

Python

.NET

Java

Ruby

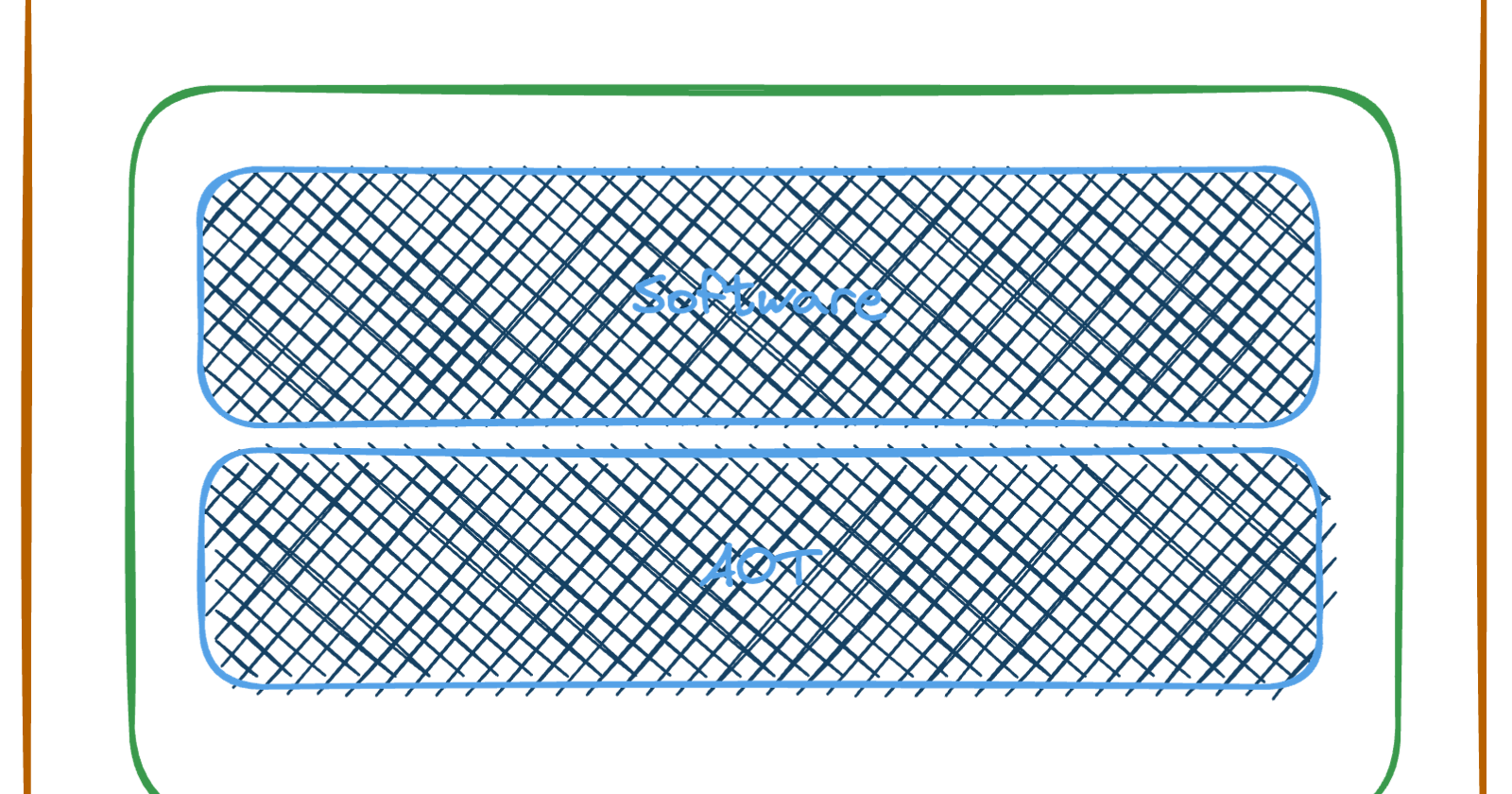

AWS Lambda also provides OS-only runtimes, which ship only the operating system and rely on an integrated runtime interface to become an operational runtime. An OS-only runtime is an excellent choice for ahead-of-time (AOT) compiled languages. Custom images, on the other hand, are a good fit for runtimes that are not managed by AWS and are not AOT compiled.

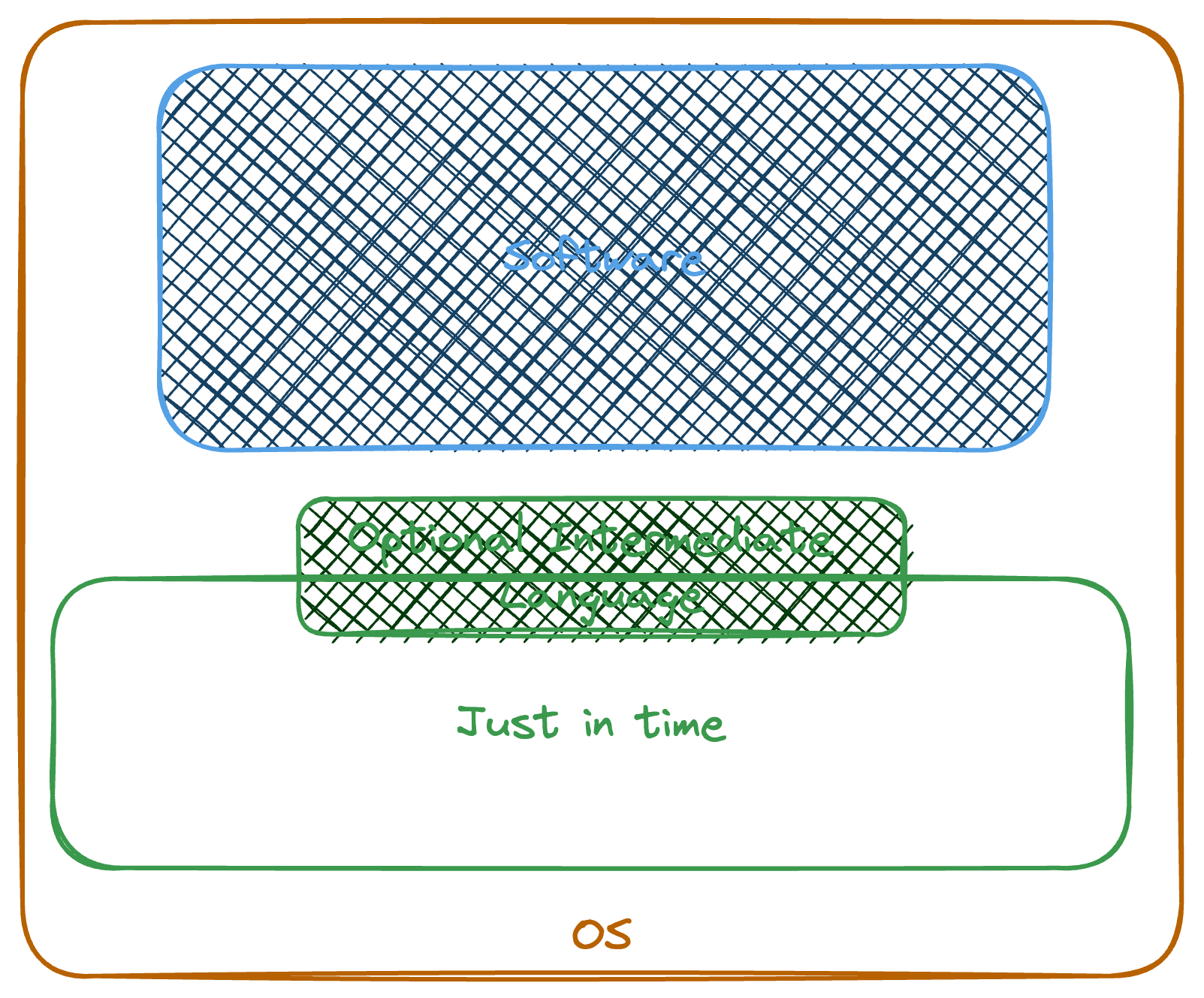
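To make the "runtime interface" more concrete, here is a minimal sketch of what one iteration of a custom runtime's loop could look like against the Lambda Runtime API. The endpoint paths and the `Lambda-Runtime-Aws-Request-Id` header come from the Runtime API; the function name `handleNextInvocation`, the `FetchLike` type, and the `HandlerError` error type are assumptions made for this illustration, and a production bootstrap needs more (error reporting during init, deadlines, tracing headers).

```typescript
// Narrow, dependency-free shapes for the HTTP client (hypothetical names).
interface ResponseLike {
  headers: { get(name: string): string | null };
  text(): Promise<string>;
}
type FetchLike = (
  url: string,
  init?: { method?: string; body?: string },
) => Promise<ResponseLike>;
type Handler = (event: unknown) => Promise<unknown> | unknown;

// Processes exactly one invocation and returns its request id.
async function handleNextInvocation(
  runtimeApi: string, // value of the AWS_LAMBDA_RUNTIME_API environment variable
  handler: Handler,
  fetchFn: FetchLike,
): Promise<string> {
  const base = `http://${runtimeApi}/2018-06-01/runtime/invocation`;

  // 1. Ask the Runtime API for the next event.
  const next = await fetchFn(`${base}/next`);
  const requestId = next.headers.get("Lambda-Runtime-Aws-Request-Id") ?? "";
  const event: unknown = JSON.parse(await next.text());

  try {
    // 2. Run the function code and post its result back.
    const result = await handler(event);
    await fetchFn(`${base}/${requestId}/response`, {
      method: "POST",
      body: JSON.stringify(result),
    });
  } catch (err) {
    // 3. Report a handler failure for this invocation.
    await fetchFn(`${base}/${requestId}/error`, {
      method: "POST",
      body: JSON.stringify({ errorMessage: String(err), errorType: "HandlerError" }),
    });
  }
  return requestId;
}
```

A real bootstrap would call this function in an endless loop; an AOT-compiled binary on an OS-only runtime embeds an equivalent loop in the executable itself.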

IL & JIT

Compilers translate high-level languages into low-level instructions that processors can execute, optimizing the code along the way to improve runtime performance. In some languages, such as C#, the compiler first generates Intermediate Language (IL); at runtime, a Just-in-Time (JIT) compiler then translates that IL into machine code for the processor the application is running on. This approach has proved effective, but it requires a language-specific runtime to be available in the container at runtime. To look at this process in more depth, sharplab.io helps visualize the IL and JIT output produced from high-level language syntax (example here).
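As a toy illustration of the idea, and not of how .NET works internally, the sketch below defines a three-instruction "IL" for the expression (2 + 3) * 4 and translates it into executable steps only on the first call, reusing the translation afterwards. Everything here (the `Instr` type, `translate`, `makeLazyCompiled`) is made up for this example; a real JIT emits machine code rather than closures.

```typescript
// A tiny stack-based "intermediate language" (hypothetical, for illustration).
type Instr =
  | { op: "push"; value: number }
  | { op: "add" }
  | { op: "mul" };

// "IL" for the expression (2 + 3) * 4.
const il: Instr[] = [
  { op: "push", value: 2 },
  { op: "push", value: 3 },
  { op: "add" },
  { op: "push", value: 4 },
  { op: "mul" },
];

type Step = (stack: number[]) => void;

// Translates one IL instruction into an executable step.
function translate(instr: Instr): Step {
  switch (instr.op) {
    case "push": {
      const value = instr.value;
      return (stack) => { stack.push(value); };
    }
    case "add":
      return (stack) => {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push(a + b);
      };
    case "mul":
      return (stack) => {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push(a * b);
      };
  }
}

// JIT-like behaviour: translate on first use, then reuse the result.
function makeLazyCompiled(program: Instr[]) {
  let steps: Step[] | undefined;
  let translations = 0;
  return {
    run(): number {
      if (steps === undefined) {
        steps = program.map(translate);
        translations += 1;
      }
      const stack: number[] = [];
      for (const step of steps) step(stack);
      return stack.pop()!;
    },
    translationCount: (): number => translations,
  };
}
```

The first `run()` pays the translation cost and later calls do not, which is roughly the trade-off a JIT makes at runtime and an AOT compiler moves to build time.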

AOT

AOT, which stands for Ahead of Time, is a compilation process that minimizes the translation work left for runtime. During the build phase, the AOT compiler translates the source code into machine code the processor understands and creates a self-contained package. This package allows the software to run in a container without requiring a language-specific runtime to be available.

One of the great advantages of AOT is that static languages, or their intermediate form, are compiled straight to machine code. During compilation, AOT applies most of the optimizations that were traditionally done by the JIT. As a disadvantage, not every optimization can be applied ahead of time, because some of them depend on information that is only available at runtime. In those situations, runtime JIT compilation shows its advantages.

Experience of IL, JIT and AOT

In my experience, when working with CRUD applications, AOT (Ahead-of-Time) compilation was the best option because there were no complexities that required JIT (Just-in-Time) compilation at runtime. However, in other scenarios with long-running, CPU-intensive calculations over large batches of data, where effective parallelization was needed, the .NET 6 runtime performed better. For transactional processing with small batches of data (20 items), AOT compilation performed better. I believe it would be worth revisiting the examples I worked on years ago and writing a dedicated article on this topic.

Rust & Go functions

I have only written a single function in Go throughout my entire experience, and I've never written a line of code in Rust either. However, I find it fascinating to follow the community and hear about these languages. Recently, I learned from Benjamen Pyle that Go and Rust are AOT and provide self-contained packages. This news made my day, and I tried to discuss it with my friends. Everyone was excited about it, and we decided to give it a try.

As part of an experiment, I followed a blog post by Benjamen Pyle.

For my first lines of Rust, I deliberately provoked errors. I changed a simple handler by adding an array and manipulating it, and, surprisingly, the compiler stopped me from producing race conditions or bugs that would otherwise only show up at runtime in production. This is a great developer experience (DX). It feels like a good linter, but in reality it is more than a linter; it is something I cannot yet give a name to. I am still discovering Rust and what is under the hood, so I will let you discover this DX yourself and share your observations.

Node.js

Since I moved to AWS, I have mostly used TypeScript (Node.js), and I am happy with this jump from C#, which was due to the lack of performance of the .NET Core 1 runtime in 2018. Node.js performs well in most scenarios and can compete with Python. Newer versions are more performant, and in some benchmarks I found Node.js close to Go (whose performance is great and more than enough for me).

The Node.js runtime is managed by AWS, and the migrations from 10 to 12, 14, 16, 18, and now 20 have always gone smoothly for me. In most cases Node.js functions have a two-digit millisecond execution duration, and the cold start has been acceptable for every business I have been involved in (the last time I measured it, the cold start rate was ~0.06% in the production account).

Back to 2018

Back in 2018, our company moved from C# to Node.js for serverless solutions, even though there were several reasons why we would have preferred to continue using C#:

We had 500 C# developers, so the move was not fast.

Existing ecosystem: there was a wide range of internal C# libraries that helped the teams apply best practices easily.

Reuse of existing code: for a while we had pushed hexagonal design in most of our complex software, so reusing business logic was easily achievable.

The risk of regressions while rewriting code was high, and as we were adopting a fast delivery approach and a rapid migration, it was preferable to use C#.

Living with Node.js

There were no particular complexities or problems, apart from no longer being able to reuse the same logic in a new language. With Node.js we had a well-performing event-driven platform with fewer headaches than before. The first benchmark results were surprising: a 300–500 ms cold start and 100–200 ms execution time for Node.js, versus a 200 ms average for C# in the existing container-based system. The gain was not evident, but after going into production our observations were:

Cold starts happened only occasionally.

AWS delivered the managed runtime and its support faster for Node.js than for .NET.

TypeScript is great, as we came from an OOP and statically typed language world.

Project layout was simpler to evaluate and change in the JS ecosystem; we always used tree-shaking, and everything went well.

It was simpler to hire AWS serverless Node.js profiles than C# ones.

Keep going with Node.js while suffering

We continued, and will continue, with Node.js for all the reasons mentioned above, but there are painful moments when using it:

Changing thousands of functions with every deprecation is not simple.

Establishing the Build & Run mindset was simple, but the mindset of upgrading to the latest runtime is hard to instill in all staff.

Every upgrade needs a full regression test phase to be sure, because code failures appear at runtime even when everything goes well locally.

While the Node.js V8 engine does some compilation, there is no way to get a fully verified package the way a compiled language such as .NET provides.

Decouple the software from container details

This is exactly what happens when using AOT, and it is possible for .NET, Go, and Rust. It may be achievable for Node.js with some over-engineering, but to be honest, I tried and never succeeded.

LLRT

Recently AWS released LLRT (Low Latency Runtime), which brings real optimization and comes very close to the best-performing runtimes on AWS Lambda. LLRT comes with some limitations, or restricted capabilities by design, as mentioned here in the documentation.

The runtime provides only very basic functionality, as indicated in the documentation, but it covers most of my functions' needs.

More needed

As Node.js is built around modules and packages, if a function needs more capabilities for a use case, we can add the package and bundle it as part of the function package. This is actually how it works for all the packages I use.

Example

To start my experimentation, I found a great repository that let me run my tests with CDK and include the LLRT runtime in the bundling process.

The construct uses an afterBundling hook to add the LLRT bootstrap to the function package:

    afterBundling: (i, o) => [
      // Download llrt binary from GitHub release and cache it
      `if [ ! -e ${i}/.tmp/${arch}/bootstrap ]; then
        mkdir -p ${i}/.tmp/${arch}
        cd ${i}/.tmp/${arch}
        curl -L -o llrt_temp.zip ${binaryUrl}
        unzip llrt_temp.zip
        rm -rf llrt_temp.zip
      fi`,
      `cp ${i}/.tmp/${arch}/bootstrap ${o}/`,
    ],
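The hook above relies on two values, `arch` and `binaryUrl`, that are defined elsewhere in the construct. As a hedged sketch of how they could be derived, the helpers below build a GitHub release download URL and the cache path used by the shell snippet. The function names are hypothetical, and the asset naming (`llrt-lambda-<arch>.zip` in the `awslabs/llrt` repository) is an assumption based on the LLRT release page at the time of writing; the construct's real implementation may differ.

```typescript
// Architectures LLRT publishes Lambda bundles for (assumed asset suffixes).
type LlrtArch = "arm64" | "x64";

// Builds the download URL of the LLRT Lambda bundle for a release tag,
// or for the latest release when no tag is given.
function llrtBinaryUrl(arch: LlrtArch, version: string = "latest"): string {
  const releases = "https://github.com/awslabs/llrt/releases";
  const path = version === "latest" ? "latest/download" : `download/${version}`;
  return `${releases}/${path}/llrt-lambda-${arch}.zip`;
}

// Mirrors the cache location checked by the afterBundling hook:
// <inputDir>/.tmp/<arch>/bootstrap
function bootstrapCachePath(inputDir: string, arch: LlrtArch): string {
  return `${inputDir}/.tmp/${arch}/bootstrap`;
}
```

Caching the binary per architecture means repeated `cdk synth` or `cdk deploy` runs only download the release once.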

I created a sample repository with both Node.js 20 and LLRT Lambda functions using CDK.

To deploy the functions, run the following command:

    cd cdk && npm i && npm run cdk:app deploy

In the cdk path, the .tmp folder holds the LLRT bootstrap for ARM64.

The example uses a function URL to simplify testing.

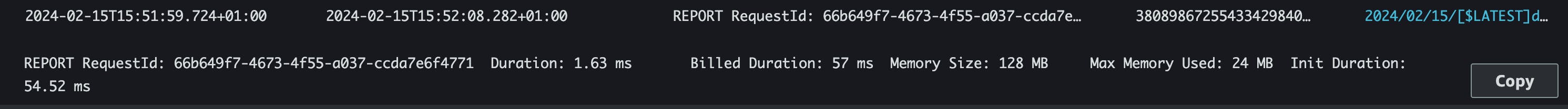

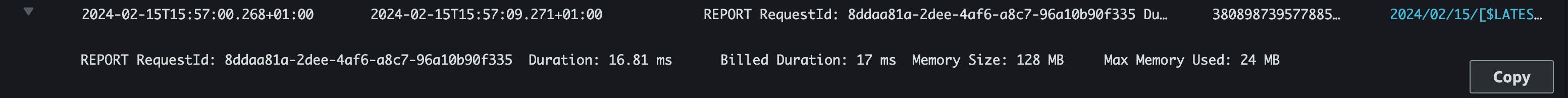
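For readers who have not used function URLs, here is a minimal sketch of the kind of handler that can sit behind one. It is not the code from the sample repository: the event and result interfaces are trimmed-down assumptions that only cover the fields used here, and the handler logic is invented for illustration. In a deployed function the handler would be exported (`export const handler = ...`).

```typescript
// Subset of the function URL request payload used by this sketch.
interface FunctionUrlEvent {
  rawPath: string;
  requestContext: { http: { method: string } };
}

// Subset of the response shape a function URL accepts.
interface FunctionUrlResult {
  statusCode: number;
  headers?: Record<string, string>;
  body: string;
}

// Echoes the HTTP method and path; plain JavaScript features only,
// so the same code can be bundled for Node.js 20 or for LLRT.
const handler = async (event: FunctionUrlEvent): Promise<FunctionUrlResult> => {
  return {
    statusCode: 200,
    headers: { "content-type": "application/json" },
    body: JSON.stringify({
      method: event.requestContext.http.method,
      path: event.rawPath,
    }),
  };
};
```

Keeping the handler free of runtime-specific APIs is what makes a like-for-like comparison between the two runtimes possible.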

Invoking the LLRT function URL and looking at the CloudWatch logs gives interesting insights:

Init: 54.52 ms

Duration: 1.63 ms

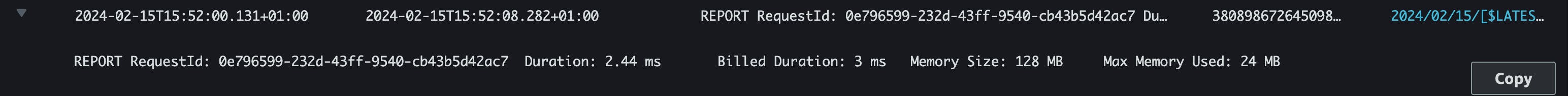

I was curious to invoke the warm container again, and the result seemed strange to me.

I tried again and again; the duration kept changing, and for the maximum duration I had a report as below.

This is 7 times more than the previous invocations, but it is still great performance.

While the execution environment keeps responding to requests, it has a steady single-digit duration of around 1.4 to 1.9 ms. If I leave the execution environment idle for a while and then reuse it, the first duration is in two-digit milliseconds, but the average duration remains very good.

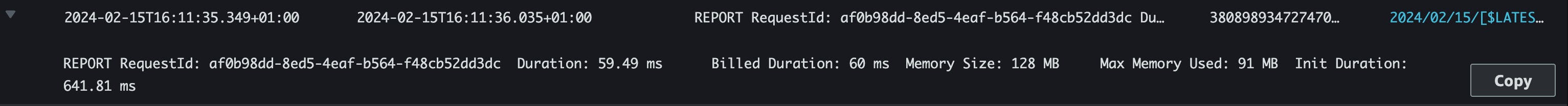

I wanted to compare this with the managed Node.js 20 runtime by invoking the Node.js function's function URL.

The results were interesting. This is the classic Node.js behavior that I observe in production, so no surprise there. But as I sent more invocations and focused more and more on the details, something interesting happened that I had never experienced while observing Node.js 18.

The Node.js 20 durations are great for the same code: with the managed runtime I had the same results as with LLRT.

The other point that got my attention was memory consumption.

LLRT: 20 MB to 25 MB

Node.js: 89 MB to 93 MB

The last point was the billed duration, which is expected to differ: LLRT is not a managed runtime, so you are billed for the init phase plus the duration.
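The numbers compared above (duration, billed duration, init duration, memory) all come from the REPORT line Lambda writes to CloudWatch at the end of each invocation. Below is a small sketch of a parser for that line, assuming the usual tab-separated layout. The function name, the interface, and the sample line are illustrative: the sample is assembled from the figures quoted in this article, not copied from a real log.

```typescript
interface ReportMetrics {
  durationMs: number;
  billedDurationMs: number;
  memorySizeMb: number;
  maxMemoryUsedMb: number;
  initDurationMs?: number; // only reported on cold starts
}

// Parses a Lambda REPORT log line; returns undefined if it is not one
// or if a mandatory field is missing.
function parseReportLine(line: string): ReportMetrics | undefined {
  if (!line.startsWith("REPORT")) return undefined;

  // Fields look like "Label: 12.34 ms" or "Label: 128 MB", separated by tabs.
  const fields = new Map<string, number>();
  for (const part of line.split("\t")) {
    const match = /^(.+): ([\d.]+) (?:ms|MB)$/.exec(part.trim());
    if (match) fields.set(match[1], Number(match[2]));
  }

  const durationMs = fields.get("Duration");
  const billedDurationMs = fields.get("Billed Duration");
  const memorySizeMb = fields.get("Memory Size");
  const maxMemoryUsedMb = fields.get("Max Memory Used");
  if (
    durationMs === undefined ||
    billedDurationMs === undefined ||
    memorySizeMb === undefined ||
    maxMemoryUsedMb === undefined
  ) {
    return undefined;
  }
  return {
    durationMs,
    billedDurationMs,
    memorySizeMb,
    maxMemoryUsedMb,
    initDurationMs: fields.get("Init Duration"),
  };
}

// Illustrative cold-start line (values are assumptions, not a captured log).
const sampleReport =
  "REPORT RequestId: 00000000-0000-0000-0000-000000000000\t" +
  "Duration: 1.63 ms\tBilled Duration: 57 ms\tMemory Size: 128 MB\t" +
  "Max Memory Used: 21 MB\tInit Duration: 54.52 ms\t";
```

Comparing `billedDurationMs` with `durationMs` plus `initDurationMs` on cold starts is a quick way to see whether the init phase is included in the bill for a given runtime.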

Estimation

Finally, I was curious to estimate the cost of running LLRT and Node.js.

As I had no more credits in my accounts, I first planned to estimate the cost from the pricing page using a scenario, but in the end I decided to run a solid test using Artillery:

    config:
      target: https://XXXXXXXXXXXXXXX.lambda-url.eu-west-1.on.aws
      phases:
        - duration: 1200
          arrivalRate: 100
          name: "Test Nodejs 20"
      environments:
        production:
          target: https://YYYYYYYYYYYYYYYYY.lambda-url.eu-west-1.on.aws
          phases:
            - duration: 1200
              arrivalRate: 100
              name: "Test LLRT"
    scenarios:
      - flow:
          - get:
              url: "/"

Memory configured: 128 MB

Architecture: ARM64

Request count: ~6 000 000

I used Artillery to run a spike test and verified the results with the following CloudWatch Logs Insights query:

    filter ispresent(@duration)
    | stats sum(@billedDuration), sum(@maxMemoryUsed/1024/1024), count(*) as requestcount

| Runtime | Duration (GB-seconds) | Duration price (USD) | Requests | Request price (USD) |
| --- | --- | --- | --- | --- |
| Node.js 20.x | 19001 | ~0.2533 | 6 090 000 | ~1.218 |
| LLRT | 19783 | ~0.2637 | 6 090 000 | ~1.218 |
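The arithmetic behind these figures can be sketched as follows. The two price constants are assumptions taken from the public Lambda pricing page for Arm functions at the time of writing (USD per GB-second and USD per million requests) and may differ by region or change over time. The request count of 6 090 000 used in the example is also an assumption, chosen to be in line with the ~6 000 000 requests stated for the test.

```typescript
// Assumed on-demand prices in USD (Arm architecture); check the pricing page.
const PRICE_PER_GB_SECOND_ARM = 0.0000133334;
const PRICE_PER_MILLION_REQUESTS = 0.2;

// Converts the sum of @billedDuration (milliseconds) into GB-seconds
// for a function configured with the given memory size.
function billedMsToGbSeconds(totalBilledMs: number, memoryMb: number): number {
  return (totalBilledMs / 1000) * (memoryMb / 1024);
}

// Cost of the compute part of the bill.
function durationCost(gbSeconds: number): number {
  return gbSeconds * PRICE_PER_GB_SECOND_ARM;
}

// Cost of the request part of the bill.
function requestCost(requestCount: number): number {
  return (requestCount / 1e6) * PRICE_PER_MILLION_REQUESTS;
}

// Example inputs: GB-seconds from the table, assumed request count.
const nodeDurationCost = durationCost(19001);
const llrtDurationCost = durationCost(19783);
const requestsCost = requestCost(6090000);
```

With a 128 MB function, one GB-second corresponds to 8 seconds of billed duration, which is why the compute part stays small next to the request part at this load.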

This shows that the pricing is close, and for a typical business with this sort of load it is essentially equivalent. To be honest, LLRT is still in an experimental phase and I would not adopt it for production; what LLRT offers, we can already achieve using managed runtimes. The only gap is the self-contained package, which can be handy in lots of use cases, ideally for the kind of software I run.

A final word

Often, in companies, we define a tech radar that considers all historical, strategic, and technical aspects. The last consideration is the plan. Many details go into deciding whether to adopt a programming language, tooling, or vendor services. The cost of moving to a new programming language is often higher than the cost of improving practices and optimizing (at least in my experience), but technology evidently keeps evolving, and if we do not question ourselves, internally or externally, we will never grow.

Going to Rust is a nice move. Using LLRT is fascinating. Go is great, and C# has, for me, the greatest and cleanest syntax among the languages I have worked with, such as Node.js, Python, F#, Delphi, VB, C++, and Java (to which it is very close). Still, I moved to the Node.js ecosystem after 20 years of .NET programming purely because of tradeoffs, and tradeoffs are everywhere. Accepting tradeoffs as a fact is also important. Do we stop C# or serverless? For sure, C# is the loser in this game. Do we adopt LLRT, or do we improve our current software? For sure, improving is faster than moving to LLRT and doing lots of time-consuming tests.

The adoption of new things in a business is not for fun; it must have an impact on the system.