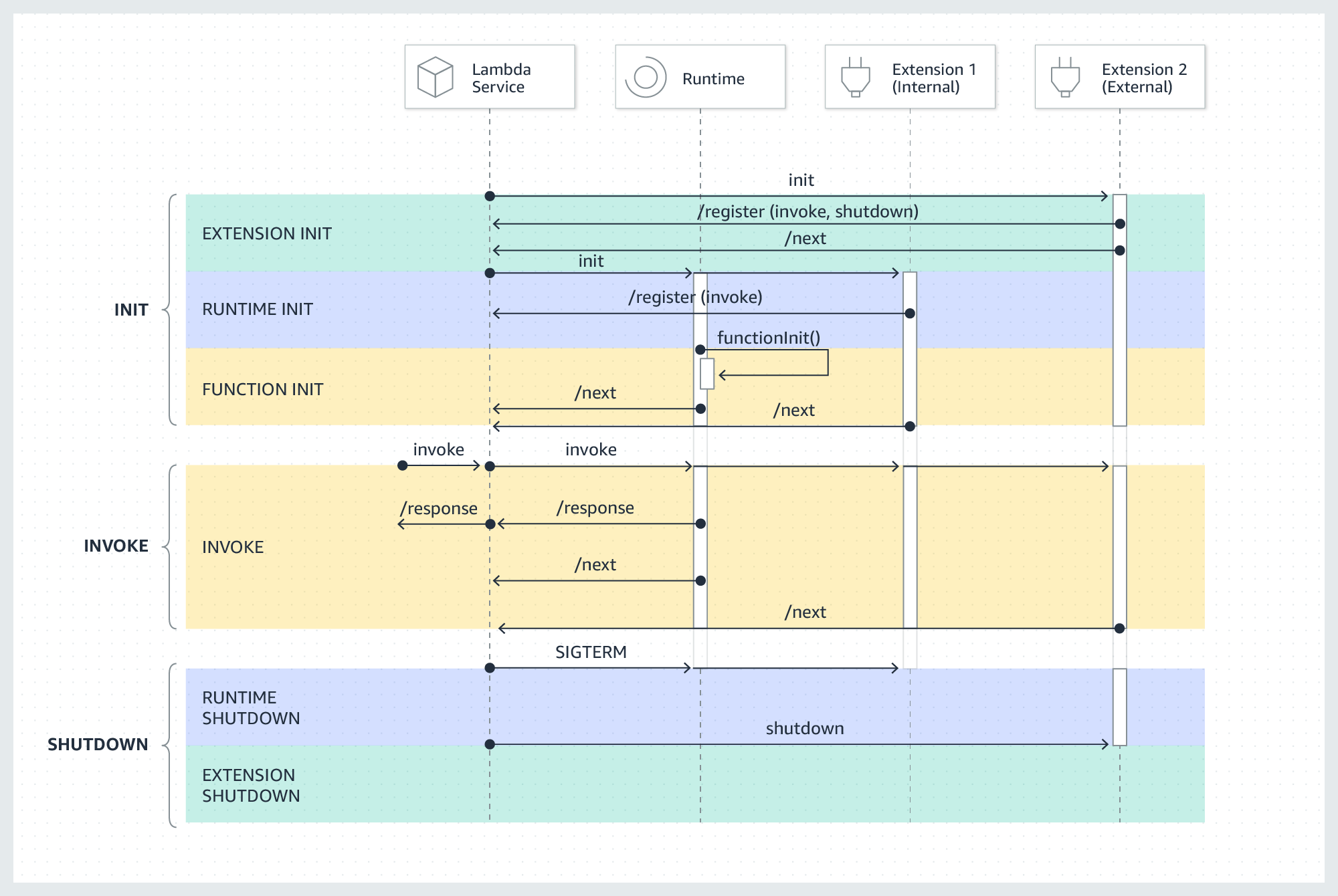

Using Lambda extensions requires establishing a connection between the extension process and the extension API via register() and next() calls. While this seems straightforward, there are details that can cause an already initialized MicroVM to be lost and force a new initialization. The following figure illustrates how the Lambda extension is involved during the different lifecycle phases.

Telemetry API Connection

When an extension handshakes with the Telemetry API, a connection is established and kept alive for the lifetime of the Lambda execution environment. This allows the registered connection to be reused for future Lambda invocations, which is why next() is called repeatedly.
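As a rough illustration of the register() and next() calls mentioned above, here is a minimal sketch against the Lambda Extensions API over HTTP. It assumes the host comes from the AWS_LAMBDA_RUNTIME_API environment variable; the extension name and the injectable `fetchImpl` parameter are hypothetical additions so the sketch can be exercised outside Lambda, and this is not the aws-samples code itself.

```typescript
// Sketch of the register() / next() handshake, assuming the 2020-01-01 Extensions API paths.
type FetchLike = (url: string, init?: RequestInit) => Promise<Response>;

const API_VERSION = '2020-01-01';

export async function register(
  runtimeApi: string,           // value of AWS_LAMBDA_RUNTIME_API
  extensionName: string,        // hypothetical name chosen for illustration
  fetchImpl: FetchLike = fetch, // injectable only so the sketch can run without Lambda
): Promise<string> {
  const res = await fetchImpl(`http://${runtimeApi}/${API_VERSION}/extension/register`, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Lambda-Extension-Name': extensionName,
    },
    body: JSON.stringify({ events: ['INVOKE', 'SHUTDOWN'] }),
  });
  if (!res.ok) throw new Error(`register failed with status ${res.status}`);
  const id = res.headers.get('lambda-extension-identifier');
  if (!id) throw new Error('register response had no extension identifier');
  return id;
}

export async function next(
  runtimeApi: string,
  extensionId: string,
  fetchImpl: FetchLike = fetch,
): Promise<{ eventType: string }> {
  // Long poll: the request stays open until the next INVOKE or SHUTDOWN event.
  const res = await fetchImpl(`http://${runtimeApi}/${API_VERSION}/extension/event/next`, {
    method: 'GET',
    headers: { 'Lambda-Extension-Identifier': extensionId },
  });
  if (!res.ok) throw new Error(`next failed with status ${res.status}`);
  return (await res.json()) as { eventType: string };
}
```

The important detail for the rest of this post is that next() is a long-lived request: it only returns when an event arrives, so any client-side timeout applies to the whole waiting period.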

Proactive Initialization

Proactive initialization is how the AWS Lambda service helps reduce cold starts, by predicting the required load for a function based on past data. While this is useful, it can introduce race conditions, because an execution environment may be initialized well before its first invocation arrives.
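One way to observe this in practice is to measure the gap between initialization and the first invocation. The sketch below is only an assumption-laden heuristic: the function name and the 10-second threshold are hypothetical and not an AWS-provided signal.

```typescript
// Hypothetical threshold: a gap larger than this between init and the first
// invocation is treated as a hint that the environment was initialized proactively.
export const PROACTIVE_GAP_MS = 10_000;

export type InitKind = 'proactive' | 'on-demand';

// Classifies an environment from two timestamps (milliseconds since epoch).
export function classifyInit(
  initAtMs: number,
  firstInvokeAtMs: number,
  thresholdMs: number = PROACTIVE_GAP_MS,
): InitKind {
  const gapMs = firstInvokeAtMs - initAtMs;
  return gapMs > thresholdMs ? 'proactive' : 'on-demand';
}
```

Recording `Date.now()` at startup and again on the first INVOKE event, then logging the result, is enough to see how long an environment sat idle before it was used.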

Node Native Fetch

Node's native fetch is an interesting option, as it adds no external dependencies to the bundle and does the job well. fetch is a Node global API for standard, lightweight HTTP communication, but it comes with its own characteristics. Under the hood, fetch uses the undici package, an HTTP client written from scratch. By default, fetch comes with a 5-minute timeout, and fetch itself offers no timeout option to change it, unlike axios or equivalents.

Connection Timeout

Putting the previous details together: with proactive initialization, if the first invocation reaches the Lambda function after a while (specifically, more than 5 minutes), the extension's pending request times out and the extension crashes. Thanks to the isolation between the extension and the handler, this does not fail the function invocation, but it does reinitialize the execution environment. That is the theory, at least; I'm not sure about that safety, as my Lambda functions did report error metrics.

My implementation was taken directly from the Lambda sample in the aws-samples repository here. Since Amazon Q, Copilot, and the internet could not help me, I dove into the docs and dug deeply into fetch, undici, and the extension code.
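To make the failure mode more concrete, the following sketch shows how such a timeout can be recognized in code. It assumes that native fetch rejects with an error whose `cause` carries an undici error code such as `UND_ERR_HEADERS_TIMEOUT`; the helper names are hypothetical, and whether re-issuing the request after a timeout is acceptable to the Lambda service has not been verified here.

```typescript
// undici error codes that indicate a client-side timeout.
const TIMEOUT_CODES = new Set([
  'UND_ERR_HEADERS_TIMEOUT',
  'UND_ERR_BODY_TIMEOUT',
  'UND_ERR_CONNECT_TIMEOUT',
]);

// Returns true when the error looks like a fetch failure caused by an undici timeout.
export function isFetchTimeout(err: unknown): boolean {
  const cause = (err as { cause?: { code?: unknown } } | null | undefined)?.cause;
  return typeof cause?.code === 'string' && TIMEOUT_CODES.has(cause.code);
}

// Retries a long-poll call when it fails with a timeout, up to maxRetries times.
// Any other error, or a timeout after the last retry, is rethrown unchanged.
export async function pollWithRetry<T>(poll: () => Promise<T>, maxRetries = 3): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await poll();
    } catch (err) {
      if (!isFetchTimeout(err) || attempt >= maxRetries) throw err;
    }
  }
}
```

This is an illustration of the symptom rather than the fix used in this post, which raises the timeouts instead of retrying.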

Resolving the Problem

Finally, I found undici's global dispatcher (setGlobalDispatcher) and looked into whether it matched my needs. Assuming the Lambda service takes care of the execution lifecycle, it sounded like a good candidate for resolving the extension problem.

The following snippet shows the simple implementation to tackle the timeout problem.

import { Agent, setGlobalDispatcher } from 'undici';

// 60 minutes, expressed in milliseconds
export const CONNECTION_TIMEOUT_MS = 60 * 60_000;

// fetch is a global native API.
// There is no possibility to configure the agent per request,
// so we need to set the global agent configuration.
// This is a workaround that sets the agent configuration globally.
setGlobalDispatcher(new Agent({
  connectTimeout: CONNECTION_TIMEOUT_MS,
  headersTimeout: CONNECTION_TIMEOUT_MS,
  bodyTimeout: CONNECTION_TIMEOUT_MS,
  keepAliveTimeout: CONNECTION_TIMEOUT_MS,
  keepAliveMaxTimeout: CONNECTION_TIMEOUT_MS
}));

Source Code

Conclusion

I wonder whether other AWS customers have faced this issue and, if so, how long the aws-samples example can remain a blueprint for customers while causing this kind of frustration. I'm not trying to argue, only to share the frustration of the days and teams spent struggling with those error metrics.

I don't have more to add here, but I'll raise a PR on the provided example to prevent others from having the same experience I did.

Enjoy Reading