EventCatalog has become a preferred cataloging tool for event-driven architectures because of its simplicity, its structured way of organizing specifications across the enterprise, and the extensibility its plugins provide. It works well in many enterprises but still has some gaps to close, and the community is actively working on new ideas and enhancements to bring more simplicity at any scale.

I have been using EventCatalog for three years now, but this time I tried to use it to simplify service documentation transparently and automatically, and I had some deep-thinking moments about how to make it as suitable as possible for my own needs.

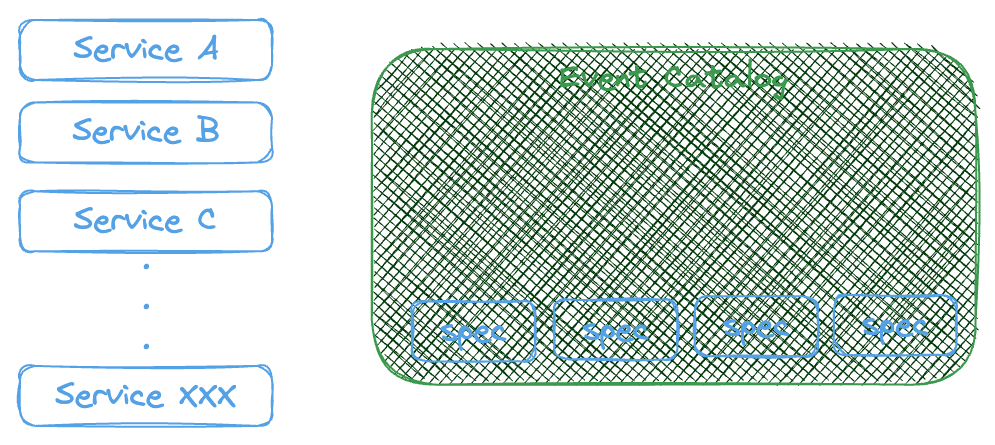

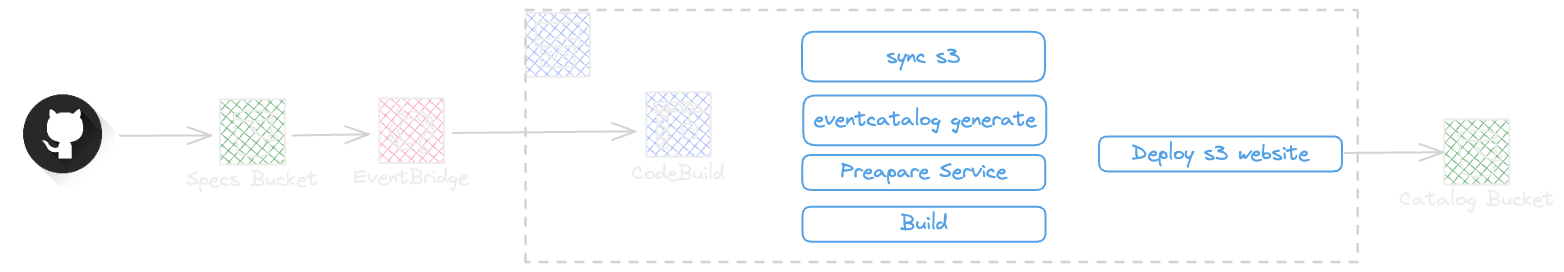

Here is how I would like to manage cataloging: as a central engine, as illustrated in the diagram.

However, EventCatalog was designed around a central source of truth, and that was not what I was looking for in a cataloging solution: I have always preferred to keep documentation under the service's source control, in the same repository where the service resides. The central model is EventCatalog's operational model and it seems fine, but the enterprise ecosystem often brings other constraints during adoption.

The current operational model of EventCatalog seems standard enough, but I also need to consider our software development practices, so I decided to stop waiting and build something operational based on our practices, using EventCatalog.

AsyncAPI Extension

First, I considered opening a PR on the EventCatalog AsyncAPI extension on GitHub, but I had the solution open for a week without finding time to start (as I am often in meetings). The EventCatalog AsyncAPI plugin builds on EventCatalog's generator concept: you add some simple configuration to the default JS config file, named eventcatalog.config.js.

```javascript
const path = require('path');

module.exports = {
  title: 'EventCatalog',
  tagline: 'Discover, Explore and Document your Event Driven Architectures',
  organizationName: 'Your Company',
  projectName: 'Event Catalog',
  // ...
  generators: [
    [
      '@eventcatalog/plugin-doc-generator-asyncapi',
      {
        pathToSpec: [
          path.join(__dirname, '../specs/Order/placement/1.0.0', 'asyncapi.yaml'),
          path.join(__dirname, '../specs/Order/Shipment/1.0.0', 'asyncapi.yaml'),
        ],
        versionEvents: false,
        renderNodeGraph: true,
        renderMermaidDiagram: true,
        domainName: 'Order',
      },
    ],
    [
      '@eventcatalog/plugin-doc-generator-asyncapi',
      {
        pathToSpec: [
          path.join(__dirname, '../specs/Product/stock/1.0.0', 'asyncapi.yaml'),
        ],
        versionEvents: false,
        renderNodeGraph: true,
        renderMermaidDiagram: true,
        domainName: 'Product',
      },
    ],
  ],
};
```

With the generators in place, the npm run generate command reads all specifications and generates the respective domains, events, and services under the domains folder.
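To make the shape of the generators array concrete, here is a minimal sketch that builds the same structure as the hand-written config above from a map of domain names to spec paths: one plugin entry per domain, with all of that domain's specs in pathToSpec. The helper name buildGenerators, the input map, and the /catalog base directory are hypothetical; the option names are the ones used in the config above.

```javascript
const path = require('path');

// Shared generator options, copied from the hand-written config above.
const sharedOptions = {
  versionEvents: false,
  renderNodeGraph: true,
  renderMermaidDiagram: true,
};

// Hypothetical helper: one AsyncAPI generator entry per domain.
// specsByDomain maps a domain name to spec paths relative to baseDir.
function buildGenerators(specsByDomain, baseDir) {
  return Object.entries(specsByDomain).map(([domainName, specs]) => [
    '@eventcatalog/plugin-doc-generator-asyncapi',
    {
      pathToSpec: specs.map((spec) => path.join(baseDir, spec)),
      ...sharedOptions,
      domainName,
    },
  ]);
}

// Same domains and specs as the example config.
const generators = buildGenerators(
  {
    Order: [
      '../specs/Order/placement/1.0.0/asyncapi.yaml',
      '../specs/Order/Shipment/1.0.0/asyncapi.yaml',
    ],
    Product: ['../specs/Product/stock/1.0.0/asyncapi.yaml'],
  },
  '/catalog'
);

console.log(generators.length); // 2
```

Every new service or domain still means another hand-edited entry in this list.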

Complexity

We could ask every service-owning team to open a pull request whenever a new service is created or a change is needed, but this approach goes against what we have learned from software managed by multiple teams: in the best case it works at some scale, and in the worst case it becomes frustrating.

Another challenge I encountered was how large the EventCatalog config file can become when there are already hundreds of services across around 10 domains, not counting internal modules communicating in an event-driven way. That would be a nightmare to live with.

Listing Desired State

Finally, with all these blocking points and a list of needs that the current state of EventCatalog does not cover, there was no starting point other than listing what I need:

- Specifications are under the service's ownership.
- No effort beyond keeping specifications up to date in the service repository.
- Each service is responsible for formatting and validating its specifications.
- Specifications must follow the governance conventions.
- A catalog represents all events and service specifications.
- The catalog must stay in sync with the real service specifications.
- Catalog integration must be automated and autonomous.
- The catalog must be reproducible rapidly.
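As an illustration of the validation requirement, here is a minimal sketch of a check a service could run in its own pipeline before its specs are synced. It assumes the YAML has already been parsed into a plain object (for example with a YAML library, not shown) and only checks fields the AsyncAPI specification marks as required in both 2.x and 3.0 (asyncapi, info.title, info.version). The function name validateSpec is hypothetical, and a real setup would more likely rely on a dedicated AsyncAPI parser or linter.

```javascript
// Hypothetical governance check on an already-parsed AsyncAPI document.
// Returns a list of human-readable problems; an empty list means it passes.
function validateSpec(doc) {
  if (doc === null || typeof doc !== 'object') {
    return ['document is not an object'];
  }
  const problems = [];
  // The AsyncAPI version field is required at the root.
  if (typeof doc.asyncapi !== 'string') {
    problems.push('missing "asyncapi" version field');
  }
  // info.title and info.version are required by the specification.
  const info = doc.info || {};
  if (typeof info.title !== 'string' || info.title.trim() === '') {
    problems.push('missing "info.title"');
  }
  if (typeof info.version !== 'string' || info.version.trim() === '') {
    problems.push('missing "info.version"');
  }
  return problems;
}

const ok = { asyncapi: '2.6.0', info: { title: 'Order placement', version: '1.0.0' } };
const broken = { info: { title: 'Stock' } };

console.log(validateSpec(ok)); // []
console.log(validateSpec(broken).length); // 2
```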

Catalog Design

The catalog design was driven by the following considerations:

- Static and bundled, for simplicity
- Responds to changes based on events
- Decoupled, without adding hard dependencies
- Autonomous

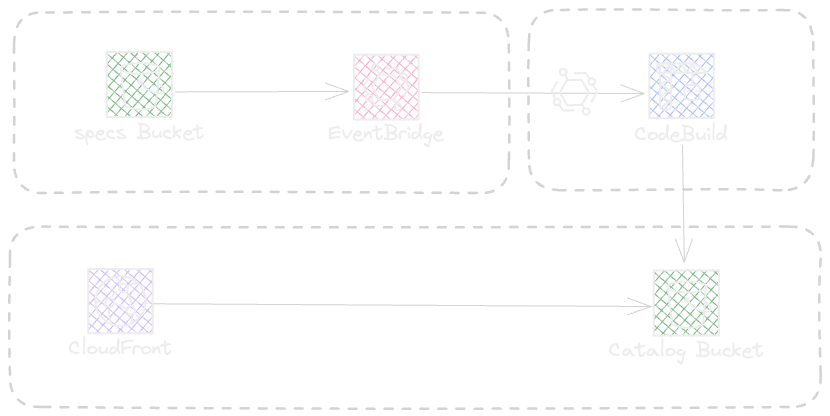

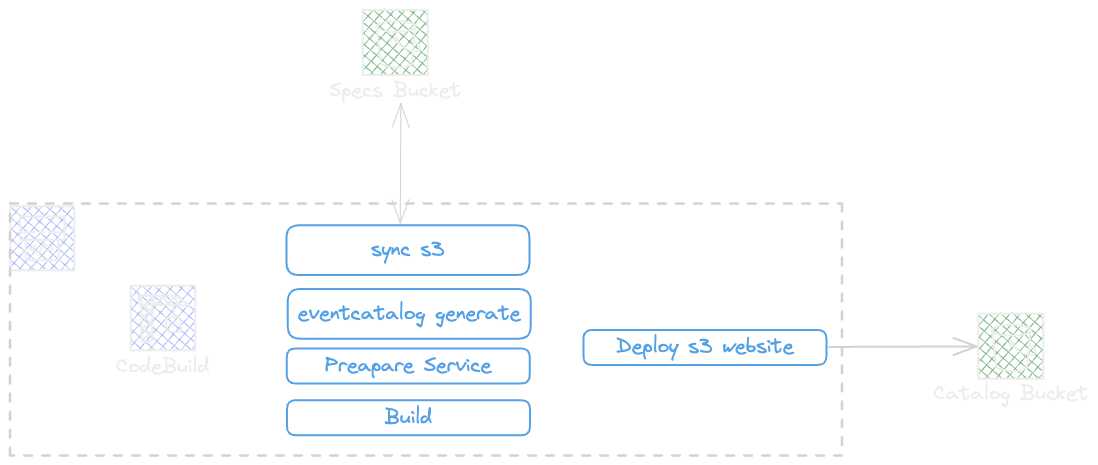

The final design is represented in the diagram.

Source Code

Build Configuration

Building the configuration dynamically was simple: it only required adding a Node.js script, as explained below.

The config generator is a simple script that performs the following steps:

1. Get all YAML files in the specs folder.
2. Feed the generators array by creating one generator element per specification.
3. Merge the default config and the generators.
4. Write the result, overwriting eventcatalog.config.js if it is present.

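```javascript
const fs = require('fs');
const path = require('path');

// Base EventCatalog settings (title, tagline, organizationName, ...)
const baseConfig = { /* ... */ };

// Options shared by every AsyncAPI generator (versionEvents, renderNodeGraph, ...)
const generatorDefaultConfig = { /* ... */ };

// Build one AsyncAPI generator per YAML specification found under specsFolder.
// getDirectories is a helper (not shown) that lists every file path under a folder.
const createGenerators = (specsFolder = 'specs') => {
  const schemas = getDirectories(specsFolder)
    ?.filter((fileName) => fileName.endsWith('.yaml'))
    .sort((a, b) => a.localeCompare(b));

  if (!schemas) return [];

  return schemas.map((schemaName) => [
    '@eventcatalog/plugin-doc-generator-asyncapi',
    {
      ...generatorDefaultConfig,
      // The first folder below specsFolder is the domain, e.g. specs/Order/... -> Order
      domainName: path.relative(specsFolder, schemaName).split(path.sep)[0],
      pathToSpec: [path.join(__dirname, schemaName)],
    },
  ]);
};

const generators = createGenerators('../specs');

// Merge the base config with the generators and overwrite eventcatalog.config.js
fs.writeFileSync(
  './eventcatalog.config.js',
  `module.exports = ${JSON.stringify({ ...baseConfig, generators }, null, 2)}`,
  'utf8'
);
```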
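The script relies on a getDirectories helper that is not shown. One possible implementation, assuming it should return every file path under the given folder recursively, each prefixed with that folder so the domain can be read from the path, is sketched below using only Node's built-in fs and path modules.

```javascript
const fs = require('fs');
const path = require('path');

// Possible getDirectories implementation (the original helper is not shown).
// Recursively collects file paths under `dir`, each prefixed with `dir`.
// Returns undefined when the folder does not exist, which the script's
// optional chaining (?.) and `if (!schemas)` guard already handle.
function getDirectories(dir) {
  if (!fs.existsSync(dir)) return undefined;
  const files = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      files.push(...getDirectories(fullPath));
    } else if (entry.isFile()) {
      files.push(fullPath);
    }
  }
  return files;
}
```

With this helper, createGenerators('../specs') receives paths such as ../specs/Order/placement/1.0.0/asyncapi.yaml and keeps only the .yaml files.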

The script can be run by adding a new entry to package.json that runs node ./config.generator.js. The scripts section of package.json then looks like this:

```json
{
  ...
  "scripts": {
    "start": "eventcatalog start",
    "dev": "eventcatalog dev",
    "build": "eventcatalog build",
    "pregenerate": "node ./config.generator.js",
    "generate": "eventcatalog generate"
  },
  ...
}
```

Because the script name is prefixed with pre, npm automatically runs pregenerate before generate whenever we run npm run generate. This is handy for extending capabilities while keeping the CI/CD scripts untouched.

Specifications

Regarding specifications, there were two considerations: first, each service takes ownership of its documentation and specification; second, the catalog must be autonomous. From these facts, I ended up with three correlated notes.

Use the options EventCatalog already provides: the catalog project keeps generating from a local spec source, which avoids any complicated customisation in the catalog project repository or in the CI/CD pipeline.

```yaml
version: 0.2
env:
  parameter-store:
    SPEC_BUCKET_NAME: /eventcatalog/bucket/specs/name
phases:
  install:
    commands:
      - echo Installing dependencies...
      - npm cache clean --force
      - cd catalog && npm install --force && cd ..
  pre_build:
    commands:
      - echo "Pre build command"
      - rm -rf specs
      - mkdir specs
      - aws s3 sync s3://$SPEC_BUCKET_NAME/ specs
  build:
    commands:
      - cd catalog
      - npm run generate
      - npm run build
artifacts:
  files:
    - '**/*'
  base-directory: catalog/out
```

The local source of the catalog must be updated and kept in sync with all services in a decoupled manner, using a GitHub Action that syncs any service repository with the catalog S3 bucket whenever its specs folder changes.

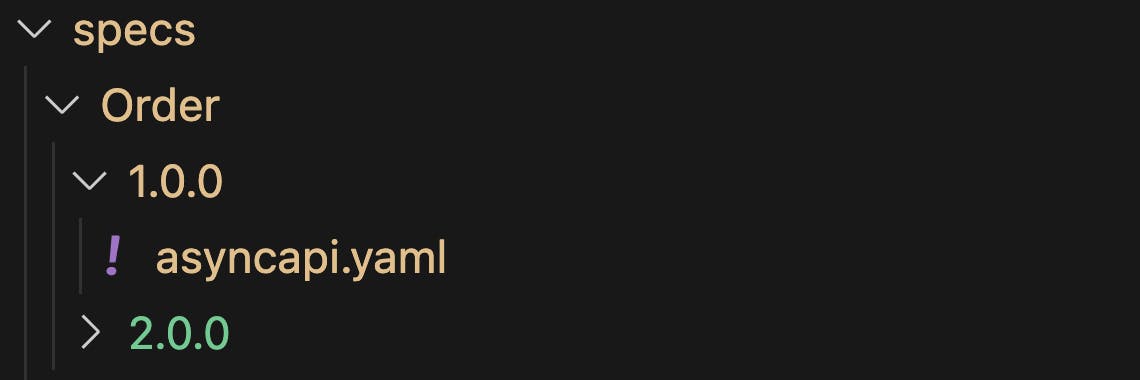

```yaml
name: AsyncApi Spec Sync
on:
  push:
    branches: [ main ]
    paths:
      - 'specs/**'
env:
  AWS_REGION: eu-west-1
jobs:
  sync-spec-to-s3:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@master
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}
      - name: Sync spec to S3
        run: |
          aws s3 sync ./specs s3://$(aws ssm get-parameter --name "/eventcatalog/bucket/specs/name" | jq -r '.Parameter.Value')/
```

Each service registers its documentation in a conventional way: a root-level specs folder, a subfolder named after the domain, and, inside the domain, a folder representing the version (for example, specs/Order/placement/1.0.0/asyncapi.yaml).
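Because the config generator derives the domain from the folder layout, a service pipeline could reject paths that break the convention before syncing. Below is a minimal sketch, assuming the layout of the example config paths (specs/Domain/name/major.minor.patch/asyncapi.yaml). The function name parseSpecPath and the exact pattern are hypothetical governance rules, not something EventCatalog enforces.

```javascript
// Hypothetical convention: specs/<Domain>/<name>/<major.minor.patch>/asyncapi.yaml
const SPEC_PATH = /^specs\/([A-Za-z][\w-]*)\/([A-Za-z][\w-]*)\/(\d+\.\d+\.\d+)\/asyncapi\.ya?ml$/;

// Returns the parsed parts for a conforming path, or null otherwise.
function parseSpecPath(relativePath) {
  // Normalise Windows separators so the same rule works on any runner.
  const match = SPEC_PATH.exec(relativePath.replace(/\\/g, '/'));
  if (!match) return null;
  const [, domain, name, version] = match;
  return { domain, name, version };
}

console.log(parseSpecPath('specs/Order/placement/1.0.0/asyncapi.yaml'));
console.log(parseSpecPath('specs/Order/asyncapi.yaml')); // null
```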

Service integration

Integrating a service is simple: copy and paste the workflow into every service repository, and any change to its specs folder is then synced to the catalog specs S3 bucket.

The S3 changes trigger the CodePipeline through the EventBridge default bus, and the pipeline then handles the spec sync and the catalog build. The following diagram shows the current CodePipeline workflow.
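For the event-driven trigger, S3 can send "Object Created" events to the EventBridge default bus once EventBridge notifications are enabled on the bucket, and a rule with a pattern like the one below can start the pipeline. The sketch builds such a pattern for a bucket name (catalog-specs-bucket is a placeholder) and includes a tiny matcher that only understands the exact-value arrays used here. It is meant to show how the pattern selects events, not to replace EventBridge's own matching.

```javascript
// Event pattern for an EventBridge rule on the default bus that reacts to
// new or updated objects in the specs bucket (bucket name is a placeholder).
function specsBucketPattern(bucketName) {
  return {
    source: ['aws.s3'],
    'detail-type': ['Object Created'],
    detail: { bucket: { name: [bucketName] } },
  };
}

// Minimal matcher: every array in the pattern lists allowed values, and
// nested objects are matched field by field. Real EventBridge patterns
// support more operators (prefix, anything-but, ...), which are ignored here.
function matches(pattern, event) {
  return Object.entries(pattern).every(([key, expected]) => {
    const actual = event == null ? undefined : event[key];
    if (Array.isArray(expected)) return expected.includes(actual);
    return typeof actual === 'object' && actual !== null && matches(expected, actual);
  });
}

const pattern = specsBucketPattern('catalog-specs-bucket');

const uploaded = {
  source: 'aws.s3',
  'detail-type': 'Object Created',
  detail: {
    bucket: { name: 'catalog-specs-bucket' },
    object: { key: 'Order/placement/1.0.0/asyncapi.yaml' },
  },
};

console.log(matches(pattern, uploaded)); // true
console.log(matches(pattern, { ...uploaded, 'detail-type': 'Object Deleted' })); // false
```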

Conclusion

This approach was one of the fastest and simplest ways to get a result without complexity, but I am still looking to tackle some pain points to make EventCatalog more suitable.

I could add a wrapper around EventCatalog, but I found that approach heading in the wrong direction: it adds extra complexity for a single point of usage across the whole enterprise and hides much of the intent behind EventCatalog's existence.

The last point for me to tackle is how to automatically fill in the consumers section of the Markdown. As this approach focuses on documentation and specifications, the consumers gap was not a priority on my side, but it is something I am actively thinking about in order to derive system landscapes from EventCatalog.
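One possible starting point for filling the consumers section automatically is to invert what each service says it receives. The sketch assumes each service's specification has already been reduced to a hypothetical record of the form { service, receives: [...] } (how to extract that from AsyncAPI is left open here); the service and event names are illustrative. It builds an event-to-consumers map that could then be written into each event's Markdown.

```javascript
// Hypothetical input: one record per service, listing the events it receives.
const services = [
  { service: 'Shipment', receives: ['OrderPlaced'] },
  { service: 'Stock', receives: ['OrderPlaced', 'OrderCancelled'] },
  { service: 'Billing', receives: [] },
];

// Invert "service -> events received" into "event -> consuming services".
function consumersByEvent(records) {
  const result = new Map();
  for (const { service, receives } of records) {
    for (const event of receives) {
      if (!result.has(event)) result.set(event, []);
      result.get(event).push(service);
    }
  }
  return result;
}

const consumers = consumersByEvent(services);
console.log(consumers.get('OrderPlaced')); // [ 'Shipment', 'Stock' ]
```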

Enjoy reading!